Programmers often need to analyze websites to extract meaningful information, and URLs make this possible. URLs are an essential component of the internet: they describe where a website's resources are located and allow users to navigate between websites.

URLs, or Uniform Resource Locators, are unique identifiers of resources on the internet. In Python, programmers can split a URL string into its components to extract meaningful information, or join URL components back into a URL string.

Splitting a URL into its components is known as parsing. Python's standard library provides modules to split URLs into their parts and to reconstruct them. This article shows programmers how to parse URLs and rejoin them using these built-in Python modules.

Parsing URLs in Python

Programmers parse URLs when they want to divide a URL into separate components to extract information. Typical components are the scheme, the domain, the path, and the query parameters.

Parsing lets them analyze these details, modify them, or construct new URLs from them. Many programmers find this technique essential across web development.

Typical tasks include web scraping, general application development, and integrating with APIs. For all of them, Python provides powerful built-in tools for URL parsing.

Python offers the urllib.parse module for this. It is part of the larger urllib package and provides a collection of functions that break URLs into their components and reassemble components into URLs.

The functions in urllib.parse fall into two broad categories: URL parsing and URL quoting. The following sections cover both in detail.

Before jumping into these techniques, let us understand what urllib.parse does. The module offers a standard interface for splitting Uniform Resource Locator (URL) strings into components and for combining components back into a URL string.

It also provides a function, urljoin(), that converts a "relative URL" into an absolute URL given a "base URL."
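As a minimal sketch of relative-URL resolution, the following uses urljoin() from urllib.parse with a base URL built from the sample.com address used throughout this article; the relative paths are made-up examples. The results in the comments follow the standard rules for resolving relative references.

```python
from urllib.parse import urljoin

base = "https://sample.com/path/to/resource"

# A plain relative path replaces the last segment of the base path
print(urljoin(base, "other"))                  # https://sample.com/path/to/other
# ".." moves one directory up from the base path's directory
print(urljoin(base, "../images/logo.png"))     # https://sample.com/path/images/logo.png
# A path starting with "/" replaces the whole base path
print(urljoin(base, "/about"))                 # https://sample.com/about
# An absolute URL ignores the base entirely
print(urljoin(base, "https://example.org/x"))  # https://example.org/x
```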

The module supports the following URL schemes:

- file

- ftp

- gopher

- hdl

- http

- https

- imap

- mailto

- mms

- news

- nntp

- prospero

- rsync

- rtsp

- rtspu

- sftp

- shttp

- sip

- sips

- snews

- svn

- svn+ssh

- telnet

- wais

- ws

- wss
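The sketch below parses a few hypothetical URLs that use schemes from this list. It is meant to show that urlparse() splits URLs of any scheme; the module's scheme lists appear to matter mainly for scheme-specific behavior, such as which schemes urljoin() resolves relative URLs for. Note that a URL like mailto:user@sample.com has no // authority part, so the address ends up in path rather than netloc.

```python
from urllib.parse import urlparse

# Hypothetical example URLs using a few of the schemes listed above
urls = [
    "ftp://ftp.sample.com/pub/file.txt",
    "mailto:user@sample.com",
    "wss://sample.com/socket",
]

for url in urls:
    parts = urlparse(url)
    # Show the scheme, network location, and path of each URL
    print(parts.scheme, "|", parts.netloc, "|", parts.path)
```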

Using the urlparse() function

To use this function, import it from the urllib.parse module. urlparse() splits a URL into six components and returns them as a 6-tuple (a named tuple called ParseResult) that follows the general URL structure scheme://netloc/path;params?query#fragment. Each item in the tuple is a string, which is empty if that component is absent.

Code Snippet:

from urllib.parse import urlparse

a = "https://sample.com/path/to/resource?query=sample&lang=en"

parsedURL = urlparse(a)

print("The components of the URL are:", parsedurl)

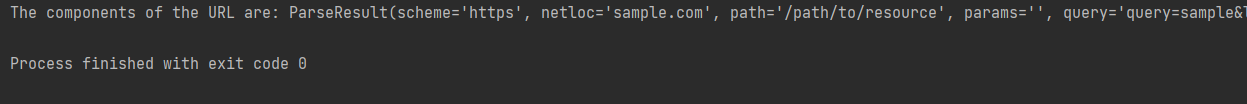

Output:

Explanation:

The urlparse() function returns a ParseResult object, a named tuple containing the individual components of the URL:

The scheme is https, the network location (domain) is sample.com, the path is /path/to/resource, and the query string is query=sample&lang=en. The params and fragment components are empty strings because the URL contains neither.
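Because the result is a named tuple, each component can also be read individually, and the pieces can be put back together. The sketch below assumes the same sample URL and uses the standard urlunparse() and geturl() helpers, plus the named-tuple method _replace(), to rebuild a modified URL; the new path /other/page is just an example value.

```python
from urllib.parse import urlparse, urlunparse

a = "https://sample.com/path/to/resource?query=sample&lang=en"
parsed_url = urlparse(a)

# Each component is available as a named attribute or by tuple index
print(parsed_url.scheme)  # https
print(parsed_url.netloc)  # sample.com
print(parsed_url[2])      # /path/to/resource (index 2 is the path)

# _replace() returns a new ParseResult with one component changed
modified = parsed_url._replace(path="/other/page")

# urlunparse() joins the six components back into a URL string
print(urlunparse(modified))  # https://sample.com/other/page?query=sample&lang=en
# geturl() rebuilds the original URL from an unmodified result
print(parsed_url.geturl())   # https://sample.com/path/to/resource?query=sample&lang=en
```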

Using the parse_qs() function

The parse_qs() function parses a query string and returns its contents as a dictionary. Because parse_qs() expects only the query string rather than a full URL, programmers usually combine it with urlparse(), which extracts the query component first.

The dictionary keys are the unique query parameter names, and each value is a list of the values given for that name, since the same parameter can appear more than once. To use the function, import it from the urllib.parse module.
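To illustrate why the values are lists, here is a short sketch with a made-up query string in which one parameter repeats and another is empty. It also shows the keep_blank_values argument and parse_qsl(), a related function that returns (name, value) pairs instead of a dictionary.

```python
from urllib.parse import parse_qs, parse_qsl

# Hypothetical query string: "tag" appears twice and "page" has an empty value
query = "tag=python&tag=url&lang=en&page="

# Repeated names keep every value; blank values are dropped by default
print(parse_qs(query))
# {'tag': ['python', 'url'], 'lang': ['en']}

# keep_blank_values=True keeps parameters whose value is empty
print(parse_qs(query, keep_blank_values=True))
# {'tag': ['python', 'url'], 'lang': ['en'], 'page': ['']}

# parse_qsl() returns (name, value) pairs in their original order
print(parse_qsl(query))
# [('tag', 'python'), ('tag', 'url'), ('lang', 'en')]
```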

Code Snippet:

from urllib.parse import urlparse

from urllib.parse import parse_qs

a = "https://sample.com/path/to/resource?query=sample&lang=en"

parsedURL = urlparse(a)

print("The components of the URL are:", parsedurl)

parameters = parse_qs(parsedurl.query)

print("Parsed parameters of the URL in dictionary are:", parameters)

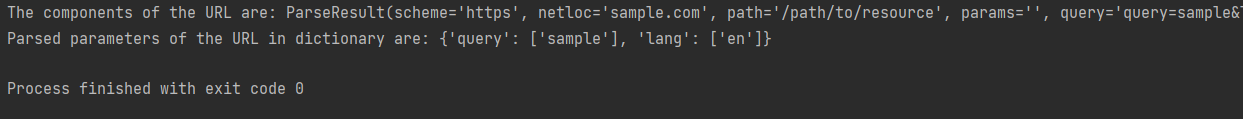

Output:

Explanation:

Here, urlparse() first splits the URL into its components, and parse_qs() then converts the query component into a dictionary that maps each parameter name to a list of values. This technique makes it easier for programmers to handle and manipulate URLs in their web development projects.
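As an example of manipulating the result, the sketch below changes one parameter, adds another, and rebuilds the URL. It assumes the same sample URL; the new values (lang=fr, page=2) are illustrative. urlencode() needs doseq=True here because parse_qs() stores every value in a list; without it, the lists themselves would be encoded as text.

```python
from urllib.parse import urlparse, parse_qs, urlencode, urlunparse

a = "https://sample.com/path/to/resource?query=sample&lang=en"
parsed_url = urlparse(a)
parameters = parse_qs(parsed_url.query)

# Change one parameter and add a new one (values stay lists, as parse_qs() produces)
parameters["lang"] = ["fr"]
parameters["page"] = ["2"]

# doseq=True turns each list into name=value pairs
new_query = urlencode(parameters, doseq=True)

# Swap in the new query string and join the components back together
new_url = urlunparse(parsed_url._replace(query=new_query))
print(new_url)  # https://sample.com/path/to/resource?query=sample&lang=fr&page=2
```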

How to validate URLs in Python?

Programs often need to check whether a URL is valid before parsing and using it, so that badly formatted URLs are caught early instead of causing errors later. Python's built-in urllib.parse module does not validate URLs: urlparse() accepts almost any string without complaint. For validation, programmers can use a third-party library such as validators (installed with pip install validators), which provides a url() function that checks whether a string is a well-formed URL.

Code Snippet:

import validators

a = "https://sample.com/path/to/resource?query=sample&language=english"

if validators.url(a):

print("You will get a valid URL")

else:

print("You will get a invalid URL")

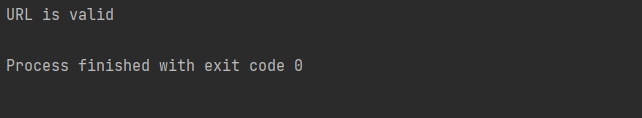

Output:

Explanation:

Validating URLs before parsing them is a good practice, especially when the URLs come from user input or other untrusted sources. Catching improperly formatted URLs early makes programs more stable and less prone to errors or crashes.
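If installing a third-party package is not an option, a rough check can be built from urlparse() alone. The function looks_like_web_url() below is a hypothetical helper, not part of any library, and it only checks for an http or https scheme and a non-empty network location, so it is far less thorough than validators.

```python
from urllib.parse import urlparse

def looks_like_web_url(url: str) -> bool:
    """Hypothetical rough check: an http(s) scheme plus a non-empty network location."""
    try:
        parts = urlparse(url)
    except ValueError:
        # urlparse() raises ValueError for some malformed input, e.g. an unclosed IPv6 bracket
        return False
    return parts.scheme in ("http", "https") and bool(parts.netloc)

print(looks_like_web_url("https://sample.com/path/to/resource?query=sample"))  # True
print(looks_like_web_url("sample.com/path"))  # False: no scheme, so no netloc either
print(looks_like_web_url("https://"))         # False: empty netloc
```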

How to return all the components of a URL?

Code Snippet:

import pandas as pd

from urllib.parse import urlparse

a = "https://sample.com/path/to/resource?query=sample&language=english"

def demo_parsing(a):

components = urlparse(a)

directory = components.path.strip('/').split('/')

query = components.query.strip('&').split('&')

ele = {

'scheme': components.scheme,

'netloc': components.netloc,

'path': components.path,

'params': components.params,

'query': components.query,

'fragment': components.fragment,

'directories': directory,

'queries': query,

}

return ele

ele = demo_parsing(a)

print(ele)

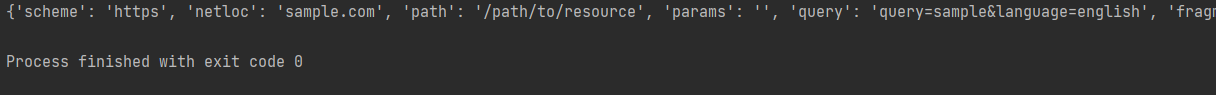

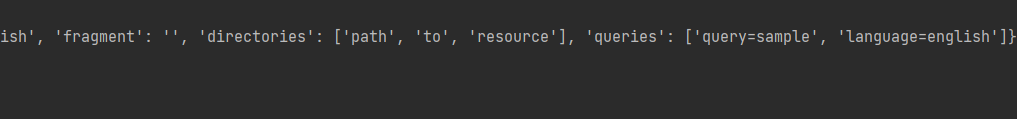

Output:

Explanation:

We defined a function named demo_parsing() that parses the URL with urlparse() and additionally splits the path into directories and the query string into individual name=value pairs. The result is a dictionary, which programmers can easily inspect and manipulate according to their needs.
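One limitation of splitting the query on & by hand is that percent-encoded values stay encoded and a URL without a query yields ['']. As an alternative sketch, the hypothetical helper split_query() below uses parse_qsl(), which decodes the values and returns an empty list when there is no query.

```python
from urllib.parse import urlparse, parse_qsl

def split_query(url: str):
    """Hypothetical helper: return the query of a URL as decoded (name, value) pairs."""
    return parse_qsl(urlparse(url).query)

print(split_query("https://sample.com/search?query=hello%20world&lang=en"))
# [('query', 'hello world'), ('lang', 'en')]
print(split_query("https://sample.com/path/to/resource"))
# []

# For comparison, the manual split leaves an empty string when there is no query
print("".strip("&").split("&"))  # ['']
```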

How to handle special characters when parsing URLs in Python?

Special characters, such as spaces or non-ASCII characters, must be encoded and decoded correctly when working with URLs. This ensures that URLs are parsed and processed exactly as intended.

The crucial point is that programmers must encode these special characters using percent-encoding, in which each byte of a character (in UTF-8 by default) is written as % followed by two hexadecimal digits; a space, for example, becomes %20. This avoids unexpected behavior or errors in the Python program.

Python's urllib.parse module provides the quote() and unquote() functions to manage the encoding and decoding of special characters.

Code Snippet:

from urllib.parse import quote, unquote

a = "https%3A//mail.google.com/mail/u/0/%3Ftab%3Drm%23inbox"

encoding_URL = quote(a, safe=':/?&=')

print("After encoding the URL:", encoding_url)

decoding_URL = unquote(encoding_url)

print("After decoding the URL:", decoding_url)

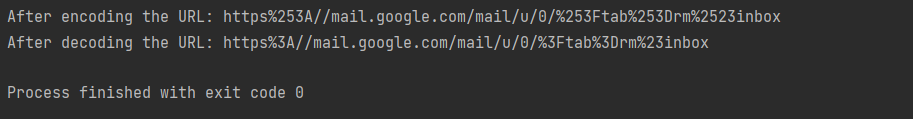

Output:

Explanation:

Here, we used the quote() and unquote() functions to handle the special characters in the URL. The quote() function replaces special characters in the string with %xx escapes.

However, it never quotes letters, digits, or the characters _.-~, and it also leaves alone any characters passed in the safe parameter (here :/?&=). The unquote() function does the opposite of quote(), replacing %xx escapes with the corresponding single characters.
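A related pair of functions, quote_plus() and unquote_plus(), encodes spaces as + instead of %20, the convention used for HTML form data in query strings. The short sketch below compares the two approaches on a made-up value.

```python
from urllib.parse import quote, quote_plus, unquote_plus

value = "sample value&more"

# quote() encodes a space as %20; with its default safe="/", the "&" is encoded too
print(quote(value))                         # sample%20value%26more
# quote_plus() encodes a space as "+", as HTML forms do in query strings
print(quote_plus(value))                    # sample+value%26more
# unquote_plus() reverses it, turning "+" back into a space
print(unquote_plus("sample+value%26more"))  # sample value&more
```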

Conclusion

Every web developer should know how to parse a URL using Python. It allows them to extract, manage, and analyze URLs easily to obtain meaningful information.

This article has highlighted Python's built-in urllib.parse module, which offers functions such as urlparse() and parse_qs(). These functions break a URL down into its components and parameters so that programmers can extract information, normalize URLs, or modify them for different purposes.

Validating URLs before parsing them is also a reliable way to avoid errors in a program. Finally, the article showed how to handle special characters using the quote() and unquote() functions.