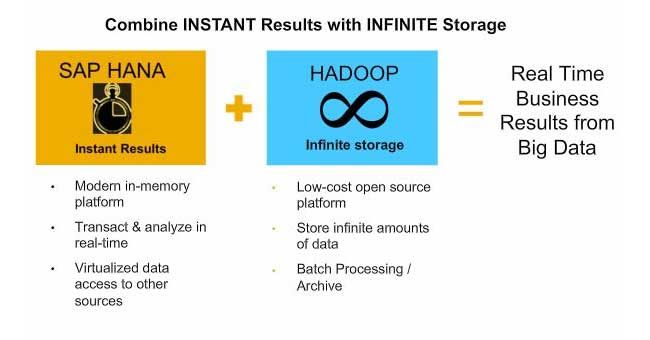

HANA and Hadoop are known to be very friendly, but there are quite a few differences between them. HANA is typically used to store high-value data that is accessed often. Hadoop is more often used to persist information for archiving and for retrieval in newer and more innovative ways. This applies especially to data you would not want to keep in HANA, such as web logs or other very large data sources.

HANA SP06 can connect to Hadoop, and batch jobs can be run in Hadoop to load data into SAP HANA. You can then perform very fast aggregations within HANA. This makes the two quite cooperative.

Hadoop, on the other hand, is increasingly capable of handling analytic queries. The documentation from Hadoop distributions such as Hortonworks and Cloudera makes it clear that this is not Hadoop's primary purpose. It also makes clear that Hadoop is heading in this direction. Ironically, as it does so, Hadoop has been deliberately developed to store structured tables using Hive or Impala. It also uses columnar storage through the ORC and Parquet file formats within the HDFS file system.

In this context, HANA and Hadoop are clearly converging.

HANA shows very good parallelization, even across very large systems, with near-linear scalability. In practice this translates to between 9m and 30m aggregations per second per core, depending on the complexity of the query.
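The per-core rate makes it possible to estimate response times. Below is a back-of-envelope sketch that assumes perfectly linear scaling across cores. The 9m and 30m aggregations/sec/core come from the text. The 28m-row, 40-core inputs are illustrative assumptions chosen to match the dataset and HANA system described in this post.

```python
# Back-of-envelope HANA aggregation time from a per-core throughput figure.
# Assumes perfectly linear scaling; rates (9m to 30m aggregations/sec/core)
# come from the text, while the row and core counts are illustrative.

def estimate_seconds(rows: int, cores: int, rate_per_core: float) -> float:
    """Time to aggregate `rows` rows spread evenly across `cores` cores."""
    if rows < 0 or cores <= 0 or rate_per_core <= 0:
        raise ValueError("rows must be >= 0; cores and rate_per_core must be > 0")
    return rows / (cores * rate_per_core)


if __name__ == "__main__":
    rows, cores = 28_000_000, 40  # assumed: 28m rows on a 40-core system
    for rate in (9_000_000, 30_000_000):
        ms = estimate_seconds(rows, cores, rate) * 1000
        print(f"{rate / 1e6:.0f}m/sec/core -> {ms:.0f} ms")
```

Under these assumptions the estimate falls in the tens of milliseconds. Real queries add overhead that this simple model ignores.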

All of my research shows that, of the Hadoop engines, Cloudera Impala has the best aggregation engine.

Setup Environment

I am using a single 32-core AWS EC2 Compute Optimized C3.8xlarge instance with 60GB of RAM. Core for core, this is almost 40% faster than my 40-core HANA system. Here is a nice secret: HANA One uses the same technology, so HANA One is also around 40% faster core for core than on-premise HANA systems.

I set it up with Red Hat Enterprise Linux 6.4 and the default options.

I made a few notes on configuring Cloudera:

- You need to ensure that you set an Elastic IP for your box and also bind it to the primary interface

- Make sure that port 8080 is open in your security group.

- Disable SELinux by editing /etc/selinux/config and setting SELINUX=disabled

- Configure a fully qualified hostname in /etc/sysconfig/network and /etc/hosts

- You must reboot after the last two steps

- Disable the iptables firewall during installation using chkconfig iptables off && /etc/init.d/iptables stop
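Two of the checklist steps are plain text edits: the SELinux setting and the /etc/hosts entry. The sketch below performs them on file contents as strings, so the logic can be tried without touching a real system. Writing the results back to /etc/selinux/config and /etc/hosts needs root, and a reboot afterwards as noted above. The hostname and IP are hypothetical placeholders. Mapping the instance's private IP in /etc/hosts is an assumption.

```python
# Sketch of two checklist edits, applied to file contents as strings.
# Writing them to /etc/selinux/config and /etc/hosts requires root and a
# reboot. Hostname and IP below are hypothetical placeholders.
import re

# Matches only the SELINUX= key, not SELINUXTYPE= or commented-out lines.
_SELINUX_LINE = re.compile(r"^SELINUX=.*$", re.MULTILINE)


def disable_selinux(config_text: str) -> str:
    """Set SELINUX=disabled, leaving other keys such as SELINUXTYPE untouched."""
    if _SELINUX_LINE.search(config_text):
        return _SELINUX_LINE.sub("SELINUX=disabled", config_text)
    # No SELINUX line present: append one on its own line.
    suffix = "" if (config_text.endswith("\n") or not config_text) else "\n"
    return config_text + suffix + "SELINUX=disabled\n"


def hosts_entry(private_ip: str, fqdn: str) -> str:
    """Build an /etc/hosts line mapping an IP to the FQDN and its short name."""
    short_name = fqdn.split(".", 1)[0]
    return f"{private_ip}\t{fqdn}\t{short_name}"


if __name__ == "__main__":
    sample = "SELINUX=enforcing\nSELINUXTYPE=targeted\n"
    print(disable_selinux(sample), end="")
    print(hosts_entry("10.0.0.12", "cdh1.example.internal"))  # hypothetical host
```

The FQDN is listed before the short name because the first name on an /etc/hosts line is treated as the canonical hostname.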

During installation, use fully qualified hostnames, log in to all hosts as ec2-user, and choose your AWS private key as the authentication method. This works the same way for Cloudera and Hortonworks.

Testing using Hive

My first step was to establish a baseline benchmark using Hive. My test data is Financial Services market data, and I'm using 28m rows for the initial tests. With HANA, we usually get 100ms response times when aggregating this data, but let's start small and work up.

Loading is very fast, at around 5-10 seconds. This isn't directly comparable to HANA, which takes a similar time. HANA also sorts, compresses and dictionary-encodes the data as it loads, whereas Hadoop simply writes it to the file system. However, a simple aggregation on Hive with TEXTFILE storage takes around a minute, which is 600x slower than HANA.

This is because Hive with plain-text storage is not optimized for this kind of query in any way.

CREATE TABLE trades ( tradetime TIMESTAMP, exch STRING, symbol STRING, cond STRING, volume INT, price DOUBLE ) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH '/var/load/trades.csv' INTO TABLE trades;

select symbol, sum(price*volume)/sum(volume) from trades group by symbol;
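The aggregation query computes a volume-weighted average price (VWAP) per symbol: sum(price*volume)/sum(volume). The sketch below runs the same SQL against an in-memory SQLite table to show what the query returns. The trade rows are made-up sample data, not the dataset used in this post, and SQLite is only a stand-in for Hive here.

```python
# Run the post's VWAP aggregation on an in-memory SQLite table to check its
# semantics: sum(price * volume) / sum(volume) per symbol.
# The sample trades are invented; SQLite stands in for Hive.
import sqlite3


def vwap_by_symbol(rows):
    """Return {symbol: VWAP} for an iterable of (symbol, volume, price) tuples."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE trades (symbol TEXT, volume INTEGER, price REAL)")
        conn.executemany("INSERT INTO trades VALUES (?, ?, ?)", rows)
        cursor = conn.execute(
            "SELECT symbol, sum(price*volume)/sum(volume) FROM trades GROUP BY symbol"
        )
        return dict(cursor.fetchall())
    finally:
        conn.close()


if __name__ == "__main__":
    sample = [("ABC", 100, 10.0), ("ABC", 300, 12.0), ("XYZ", 50, 5.0)]
    print(vwap_by_symbol(sample))
```

Prices are stored as REAL so that the division is floating point. In SQLite, dividing two integers truncates, while Hive's `/` always returns a double.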

Moving from Hive to Impala

I struggled quite a lot here, because Cloudera 5.0 Beta is more than a little buggy. Sometimes Impala could see the Hive tables, and sometimes it couldn't. At times it would throw random errors. This release is definitely not ready for production use.

I used Parquet with Snappy compression, which provides a good balance of performance and compression. Tables can't be loaded directly into Impala. Instead, the data has to be loaded into Hive first and then copied into an Impala table, which can be quite frustrating.

create table trades_parquet like trades stored as parquet;

set PARQUET_COMPRESSION_CODEC=snappy;

insert into trades_parquet select * from trades;

Query: insert into trades_parquet select * from trades

Inserted 28573433 rows in 127.11s

Loading now runs at around 220k rows/sec. On equivalent hardware, we could expect nearer 5m rows/sec from HANA. This is because Impala doesn't parallelize loading, so we are CPU-bound in a single thread. I've read in many places that writes haven't been optimized in Impala yet, which accounts for this.
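The 220k figure follows from the Impala shell output above: 28,573,433 rows in 127.11 seconds. The sketch below derives the rate from that output line and shows how long the same load would take at the ~5m rows/sec the text expects from HANA. The regex assumes the exact "Inserted N rows in Ts" wording shown. Other Impala versions may format this line differently.

```python
# Derive load throughput from the Impala shell's "Inserted N rows in Ts" line
# and compare with the ~5m rows/sec the text expects from HANA.
# The regex assumes the exact output wording shown in the post.
import re

_INSERTED = re.compile(r"Inserted (\d+) rows in ([\d.]+)s")


def rows_per_second(output_line: str) -> float:
    """Parse an 'Inserted N rows in Ts' line and return N / T."""
    match = _INSERTED.search(output_line)
    if match is None:
        raise ValueError(f"unrecognised output: {output_line!r}")
    rows, seconds = int(match.group(1)), float(match.group(2))
    return rows / seconds


if __name__ == "__main__":
    impala_rate = rows_per_second("Inserted 28573433 rows in 127.11s")
    hana_rate = 5_000_000  # figure quoted in the text, not measured here
    print(f"Impala: {impala_rate:,.0f} rows/sec")
    print(f"At {hana_rate:,} rows/sec the same load takes {28573433 / hana_rate:.1f}s")
```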

select symbol, sum(price*volume)/sum(volume) from trades_parquet group by symbol;

The first time this runs, it takes 40 seconds. The next time, it takes just 7 seconds, which is still 70x slower than HANA. Only 4 CPUs are active, so we have 10x less parallelization than HANA. Dividing the 70x gap by that 10x leaves around 7x lower per-core efficiency. This translates to at least 7x less throughput in multi-user scenarios.
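The reasoning above splits the overall gap into a parallelism part and a per-core part. The sketch below makes that decomposition explicit. HANA's 100 ms and Impala's 7 s warm run come from the text. Using 40 HANA cores is an assumption: it is the 40-core system mentioned earlier, and it is consistent with the stated 10x gap against 4 active CPUs.

```python
# Split the Impala-vs-HANA gap into parallelism and per-core efficiency.
# Timings come from the text; 40 HANA cores is an assumption consistent
# with the stated "10x less parallelization" against 4 active CPUs.

def efficiency_gap(hana_ms: float, other_ms: float,
                   hana_cores: int, other_cores: int):
    """Return (overall slowdown, parallelism gap, per-core efficiency gap)."""
    slowdown = other_ms / hana_ms
    parallelism_gap = hana_cores / other_cores
    per_core_gap = slowdown / parallelism_gap
    return slowdown, parallelism_gap, per_core_gap


if __name__ == "__main__":
    s, p, c = efficiency_gap(100, 7000, 40, 4)
    print(f"{s:.0f}x slower overall, {p:.0f}x less parallelism, "
          f"{c:.0f}x lower per-core efficiency")
```

The per-core figure is the one that matters under multi-user load. Once every core is busy, extra parallelism no longer helps, so throughput is bounded by per-core efficiency.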

In Essence

From the above, I conclude that Hadoop is very good at ingesting data, and I'm quite sure it would be even better with more nodes. It is also good at batch jobs that process data. Hadoop is no better than HANA in this respect, but its $/GB is of course much lower. If your data isn't very valuable to you and you don't access it often, storing it in HANA will be cost-prohibitive.

For aggregation, even in the best case, Hadoop is 7 times less efficient on the same hardware. HANA also has a rich feature set, simpler operation, and stores data only once. If your data is hot and is accessed and aggregated often in different ways, HANA is far superior.

Also bear in mind that HANA's SQL and OLAP engines are far more mature than anything Hadoop has to offer. Impala, although the fastest Hadoop engine, is only supported by Cloudera and is still quite immature.

With Smart Data Access, we can store hot data in HANA and cold data in Hadoop, which makes HANA and Hadoop complementary rather than competitors.
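A hot/cold split needs some rule for deciding where each table lives. The sketch below shows one hypothetical rule based on how often a table is queried. The threshold, table names and query counts are illustrative assumptions. They are not part of Smart Data Access, which only provides access to the data wherever it is placed.

```python
# A hypothetical placement rule for a HANA (hot) / Hadoop (cold) split.
# Threshold, table names and query counts are illustrative assumptions;
# Smart Data Access itself does not decide placement.
from dataclasses import dataclass


@dataclass
class TableStats:
    name: str
    queries_per_day: float


def choose_tier(stats: TableStats, hot_threshold: float = 10.0) -> str:
    """Frequently aggregated (hot) data goes to HANA; the rest goes to Hadoop."""
    return "HANA" if stats.queries_per_day >= hot_threshold else "Hadoop"


if __name__ == "__main__":
    for table in (TableStats("trades_current", 500), TableStats("weblogs_2012", 0.2)):
        print(table.name, "->", choose_tier(table))
```

In practice, the $/GB trade-off described above would also weigh in, not just query frequency.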
